
QOS Linux TC


The aim of this article is to set up traffic control on the outgoing traffic of a server. The server sits in a DMZ and simultaneously serves a local area network and the Internet:

    +----------+                          +--------+
    | Internet |=======>[ SERVER ]<=======|   LAN  |
    +----------+                          +--------+

The Internet link runs at 1 Mbit/s and the LAN at 100 Mbit/s. Services fall into three categories:

- DNS, SNMP, NTP (UDP) …: essential services, but without significant bandwidth requirements;

- SSH, which must remain usable whatever happens;

- FTP, web and mail services, which consume a lot of bandwidth but may be slowed down.

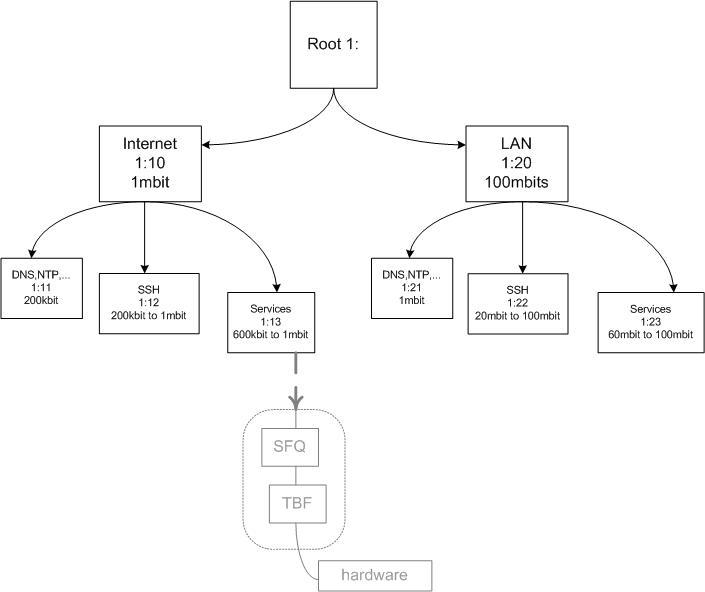

Since the Internet and the LAN have different maximum bandwidths, we will create two separate sub-trees, each with its own rules.

This is how we proceed:

1. Netfilter can mark individual packets. The mark is stored in the “nfmark” field of the sk_buff structure that represents each packet in the kernel. We will use it to categorize our outgoing flows.

2. tc, the traffic control binary, lets us create the root of the tree, the two sub-trees and their leaves.

3. For testing: iptraf, telnet and VMware.

Netfilter MARK

Netfilter lets you act directly on the kernel structure that represents a packet. This structure, sk_buff, has a __u32 field, “nfmark”, which we fill in with iptables and which the TC filter reads to select the destination class of the packet.

Assuming that “$IPT” is the iptables binary and “$LAN” is the LAN address (e.g. 192.168.1.0/24), the following rules sort packets into 6 categories:

    echo "INTERNET QOS Layer #1: snmp, dns, ntp"
    $IPT -t mangle -A OUTPUT -p udp ! -d $LAN -m multiport --sports 53,123,161:162 -j MARK --set-mark 1
    echo "INTERNET QOS Layer #2: ssh"
    $IPT -t mangle -A OUTPUT -p tcp ! -d $LAN --sport 22 -j MARK --set-mark 2
    echo "INTERNET QOS Layer #3: services http, https, ftp, ..."
    $IPT -t mangle -A OUTPUT -p tcp ! -d $LAN -m multiport --sports 20:21,25,80,443 -j MARK --set-mark 3
    # Marks for the local network
    echo "LAN QOS Layer #1: snmp, dns, ntp"
    $IPT -t mangle -A OUTPUT -p udp -d $LAN -m multiport --sports 53,123,161:162 -j MARK --set-mark 4
    echo "LAN QOS Layer #2: ssh"
    $IPT -t mangle -A OUTPUT -p tcp -d $LAN --sport 22 -j MARK --set-mark 5
    echo "LAN QOS Layer #3: services http, https, ftp, ..."
    $IPT -t mangle -A OUTPUT -p tcp -d $LAN -m multiport --sports 20:21,25,80,443 -j MARK --set-mark 6

There are 3 categories of flows and two networks, hence 6 categories. We only mark outgoing traffic, in the OUTPUT chain (which leaves POSTROUTING free for logging, just in case).
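The six rules follow a single pattern, so they can also be generated. The sketch below (POSIX sh) is one possible way to do it, not the author's script: `IPT` and `LAN` default to the placeholder values `iptables` and `192.168.1.0/24`, and the helpers `run` and `mark_net` are hypothetical names. It only prints the iptables commands unless `APPLY=1` is set, so the rules can be reviewed before being applied as root.

```shell
#!/bin/sh
# Sketch: generate the six MARK rules from one pattern.
# IPT and LAN default to placeholder values; override them from the environment.
IPT=${IPT:-iptables}
LAN=${LAN:-192.168.1.0/24}

# Hypothetical helper: print the command, or execute it when APPLY=1.
run() {
    if [ "${APPLY:-0}" = 1 ]; then "$@"; else printf '%s\n' "$*"; fi
}

# Add the three rules of one network.
#   $1: "!" for traffic NOT going to the LAN (Internet), "" for the LAN
#   $2: offset of the mark (0 for the Internet, 3 for the LAN)
mark_net() {
    neg=$1 base=$2
    # $neg is left unquoted on purpose: an empty value must vanish.
    # Category 1: UDP services (DNS 53, NTP 123, SNMP 161-162)
    run "$IPT" -t mangle -A OUTPUT -p udp $neg -d "$LAN" \
        -m multiport --sports 53,123,161:162 -j MARK --set-mark $((base + 1))
    # Category 2: SSH
    run "$IPT" -t mangle -A OUTPUT -p tcp $neg -d "$LAN" \
        --sport 22 -j MARK --set-mark $((base + 2))
    # Category 3: FTP, SMTP, HTTP, HTTPS
    run "$IPT" -t mangle -A OUTPUT -p tcp $neg -d "$LAN" \
        -m multiport --sports 20:21,25,80,443 -j MARK --set-mark $((base + 3))
}

mark_net '!' 0   # marks 1-3: Internet
mark_net ''  3   # marks 4-6: LAN
```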

Traffic Control

In Theory

Traffic Control (TC) is a system of prioritized queues that controls how the kernel handles packets. Its basic building block is the queuing discipline (qdisc), which represents the scheduling policy applied to a queue. There are several qdiscs. As with CPU scheduling, there are FIFO, FIFO with multiple queues, and several FIFOs served in round robin (SFQ, Stochastic Fairness Queueing). There is also the Token Bucket Filter (TBF), which hands out tokens to a qdisc at a fixed rate to limit its throughput (no token = no transmission = we wait until one is available).
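To make “no token = no transmission” concrete, here is a toy token-bucket simulation in POSIX sh. It illustrates the principle only and is not how the kernel's TBF is implemented: time advances in abstract ticks, the bucket receives a fixed number of tokens (bytes) per tick up to its capacity, and a packet leaves only once enough tokens have accumulated. The function `tbf_sim` and its arguments are hypothetical.

```shell
#!/bin/sh
# Toy token bucket (illustration only, not the kernel's TBF implementation).
# Usage: tbf_sim RATE BUCKET SIZE...
#   RATE   tokens (bytes) added to the bucket at each tick
#   BUCKET maximum number of tokens the bucket can hold
#   SIZE   size in bytes of each queued packet, in FIFO order
# Prints the tick at which each packet is sent.
tbf_sim() {
    rate=$1 bucket=$2
    shift 2
    tokens=$bucket   # the bucket starts full
    tick=0
    for size in "$@"; do
        if [ "$size" -gt "$bucket" ]; then
            echo "packet of $size bytes can never fit in the bucket" >&2
            return 1
        fi
        # No token = no transmission: wait until enough tokens have accumulated.
        while [ "$tokens" -lt "$size" ]; do
            tick=$((tick + 1))
            tokens=$((tokens + rate))
            if [ "$tokens" -gt "$bucket" ]; then tokens=$bucket; fi
        done
        tokens=$((tokens - size))   # sending the packet consumes its tokens
        echo "tick $tick: sent $size bytes"
    done
}

tbf_sim 500 1500 1500 1500 1500
```

With a full 1500-byte bucket refilled at 500 bytes per tick, the first packet leaves at tick 0 and the next two at ticks 3 and 6: once the initial burst is spent, the output rate settles at the refill rate.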

The policy we will use combines these techniques: HTB, the Hierarchical Token Bucket. HTB organizes classes in a tree and applies TBF-style token buckets to each of them; each leaf then has its own qdisc, for which SFQ is a common choice.
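By default, an HTB leaf class queues its packets in a plain FIFO (pfifo). To share each leaf fairly between the connections it carries, a common complement (not part of the setup in this article) is to attach an SFQ qdisc to each leaf. A sketch, assuming interface eth0 and the six leaf classes 1:11 to 1:23 defined in the next section (it must run after they exist); `perturb 10` is a usual value, not a requirement, and `run`/`add_sfq_leaves` are hypothetical helpers that only print the commands unless `APPLY=1`.

```shell
#!/bin/sh
# Sketch: attach an SFQ qdisc to every HTB leaf so that the connections
# inside one class share its bandwidth fairly. DEV is an assumption.
DEV=${DEV:-eth0}

# Hypothetical helper: print the command, or execute it when APPLY=1.
run() {
    if [ "${APPLY:-0}" = 1 ]; then "$@"; else printf '%s\n' "$*"; fi
}

add_sfq_leaves() {
    for leaf in 11 12 13 21 22 23; do
        # SFQ with handle <leaf>: below class 1:<leaf>;
        # "perturb 10" changes the flow hash every 10 seconds.
        run tc qdisc add dev "$DEV" parent "1:$leaf" handle "$leaf:" sfq perturb 10
    done
}

add_sfq_leaves
```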

HTB is the subject of chapter 7 of the Traffic Control HOWTO (http://tldp.org/HOWTO/Traffic-Control-HOWTO/classful-qdiscs.html). You should read it. Note: it is best to read chapter 6 first, which covers the classless qdiscs that HTB builds on (http://tldp.org/HOWTO/Traffic-Control-HOWTO/classless-qdiscs.html).

In Practice

We must first create the root of the tree. The root is defined by:

- the name of the network interface (eth0)

- the handle of the root (here 1:). Handle 0 (zero) is the kernel's default root, which does no shaping (packets then go out at the speed of the wire, or of the kernel if it is slower);

- the policy to apply, here “htb”;

- and the default leaf (class) of the tree, which receives every packet that no filter has classified (here 13, i.e. class 1:13).

    tc qdisc add dev eth0 root handle 1: htb default 13
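Adding a root qdisc fails while a root created by a previous run is still in place, so setup scripts commonly delete it first, which also removes every class and filter below it. A minimal sketch, assuming interface eth0; `reset_root` and `run` are hypothetical helpers, and the commands are only printed unless `APPLY=1`.

```shell
#!/bin/sh
# Sketch: (re)create the HTB root on DEV. DEV is an assumption.
DEV=${DEV:-eth0}

# Hypothetical helper: print the command, or execute it when APPLY=1.
run() {
    if [ "${APPLY:-0}" = 1 ]; then "$@"; else printf '%s\n' "$*"; fi
}

reset_root() {
    # Remove any existing root qdisc (and every class/filter below it);
    # this fails harmlessly when only the default qdisc is in place.
    run tc qdisc del dev "$DEV" root 2>/dev/null || true
    # Root handle 1:, HTB policy, unclassified packets go to class 1:13.
    run tc qdisc add dev "$DEV" root handle 1: htb default 13
}

reset_root
```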

On this root, we create two classes, one for the Internet and one for the LAN. Each of these branches has a classid (attached to the root) and a rate.

    tc class add dev eth0 parent 1:0 classid 1:10 htb rate 1Mbit mtu 1500
    tc class add dev eth0 parent 1:0 classid 1:20 htb rate 100Mbit mtu 1500

1:10 is the Internet branch and 1:20 the LAN branch.

Now, on these branches, we create the classes (leaves). Each branch has 3 leaves, matching the categories defined in the Netfilter MARK section. Each class has its own guaranteed rate, and the 3 rates add up to the branch rate. A “ceil” parameter lets a class borrow bandwidth that the other classes are not using, up to that ceiling. Finally, we define priorities. The UDP class comes first (priority 1), but its rate is capped, with no borrowing possible. Then comes SSH (priority 2), so that a console does not freeze while a transfer is in progress. Finally come the services (priority 3): they are last because they are the greediest and the most likely to congest the interface.

Therefore:

    tc class add dev eth0 parent 1:10 classid 1:11 htb rate 200kbit prio 1
    tc class add dev eth0 parent 1:10 classid 1:12 htb rate 200kbit ceil 1Mbit prio 2
    tc class add dev eth0 parent 1:10 classid 1:13 htb rate 600kbit ceil 1Mbit prio 3

and

    tc class add dev eth0 parent 1:20 classid 1:21 htb rate 20Mbit prio 1
    tc class add dev eth0 parent 1:20 classid 1:22 htb rate 20Mbit ceil 100Mbit prio 2
    tc class add dev eth0 parent 1:20 classid 1:23 htb rate 60Mbit ceil 100Mbit prio 3
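The rule that the guaranteed rates of the three leaves add up to the branch rate is easy to break when editing these commands. The check below works in kbit/s, with the conversions done by hand: 200 + 200 + 600 = 1000 for the Internet branch and, for the LAN branch, 20000 + 20000 + 60000 = 100000, which requires class 1:21 to be guaranteed 20 Mbit/s. `check_sum` is a hypothetical helper; HTB itself does not enforce this sum.

```shell
#!/bin/sh
# Sketch: check that the guaranteed rates of the leaves add up to the rate
# of their branch. All values are in kbit/s.
# Usage: check_sum BRANCH_RATE LEAF_RATE...
check_sum() {
    branch=$1
    shift
    total=0
    for r in "$@"; do
        total=$((total + r))
    done
    if [ "$total" -eq "$branch" ]; then
        echo "ok: $total kbit/s"
    else
        echo "mismatch: leaves sum to $total kbit/s, branch is $branch kbit/s"
        return 1
    fi
}

check_sum 1000 200 200 600            # Internet branch 1:10 (classes 1:11-1:13)
check_sum 100000 20000 20000 60000    # LAN branch 1:20 (classes 1:21-1:23)
```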

We now have, on one hand, a traffic control tree and, on the other hand, the packet marking. What remains is to connect the two, which is done with filtering rules. These rules are very simple: we tell TC to look at the packets bearing marks 1 to 6 (the “handle” of the fw filter) and to send each of them to the corresponding class. One important point, however: the filters must be attached to the root of the tree. Otherwise, from what I saw, they are not taken into account.

First, the filters for the LAN.

    tc filter add dev eth0 parent 1:0 protocol ip prio 1 handle 4 fw flowid 1:21
    tc filter add dev eth0 parent 1:0 protocol ip prio 5 handle 5 fw flowid 1:22
    tc filter add dev eth0 parent 1:0 protocol ip prio 3 handle 6 fw flowid 1:23

Then the Internet.

    tc filter add dev eth0 parent 1:0 protocol ip prio 4 handle 1 fw flowid 1:11
    tc filter add dev eth0 parent 1:0 protocol ip prio 5 handle 2 fw flowid 1:12
    tc filter add dev eth0 parent 1:0 protocol ip prio 3 handle 3 fw flowid 1:13
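Because marks 1 to 3 map to classes 1:11 to 1:13 and marks 4 to 6 to classes 1:21 to 1:23, the six filters can also be derived from the mark with a little arithmetic, which rules out handle/flowid mismatches. A sketch, assuming interface eth0 and filters on the root 1:0; unlike the commands above, it gives all six fw filters the same priority (1), which is enough because each packet carries at most one of these marks. `add_filters` and `run` are hypothetical helpers, and the commands are only printed unless `APPLY=1`.

```shell
#!/bin/sh
# Sketch: one fw filter per mark, all attached to the root qdisc 1:0.
DEV=${DEV:-eth0}

# Hypothetical helper: print the command, or execute it when APPLY=1.
run() {
    if [ "${APPLY:-0}" = 1 ]; then "$@"; else printf '%s\n' "$*"; fi
}

add_filters() {
    for mark in 1 2 3 4 5 6; do
        branch=$(( (mark - 1) / 3 + 1 ))   # 1 = Internet (1:1x), 2 = LAN (1:2x)
        leaf=$(( (mark - 1) % 3 + 1 ))     # 1 = UDP, 2 = SSH, 3 = services
        run tc filter add dev "$DEV" parent 1:0 protocol ip prio 1 \
            handle "$mark" fw flowid "1:$branch$leaf"
    done
}

add_filters
```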

Once the policy is in place, we can display the tree and its statistics with:

    tc -s qdisc show dev eth0
    tc -s class show dev eth0
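The statistics output is verbose. To follow only the bytes sent by each class, it can be filtered with awk. This sketch assumes the usual layout of `tc -s class show`, where each `class htb 1:xx ...` line is followed by a line starting with `Sent <bytes> bytes`; the layout can vary between iproute2 versions, so check it on your system first. `class_bytes` is a hypothetical name.

```shell
#!/bin/sh
# Sketch: print "<classid> <bytes sent>" for each class listed by
# "tc -s class show". Assumes each "class ..." line is followed by a
# "Sent N bytes ..." line, which may differ between iproute2 versions.
class_bytes() {
    awk '$1 == "class" { id = $3 }
         $1 == "Sent" && id != "" { print id, $2; id = "" }'
}

# Usage on a live system:
#   tc -s class show dev eth0 | class_bytes
```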

Or, in graphical form:

In the diagram of the tree, the height of a leaf represents its priority: the higher the leaf, the lower its prio number and the sooner its packets are processed. The light gray box represents the scheduler applied to the packets of a class (see the HTB documentation).

Test this

To test, there aren't 36 ways to do it (as we say in French ;) ): you have to load the interface and watch how it reacts. A simple method is to start netcat processes on the server that send the contents of /dev/zero to the client as fast as possible. On the client, a simple telnet to the netcat port will do.
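A sketch of the server side, assuming the senders listen on ports 21, 25 and 80 so that their traffic falls into category 3 of the marking rules (or 6 towards the LAN). netcat options differ between implementations (traditional netcat listens with `nc -l -p PORT`, the OpenBSD variant with `nc -l PORT`), hence the `NC_LISTEN` variable, a hypothetical name, as is `start_senders`. Binding to ports below 1024 requires root. The commands are only printed unless `APPLY=1`.

```shell
#!/bin/sh
# Sketch: on the server, start one netcat sender per port. Each sender pushes
# /dev/zero to whoever connects, as fast as the QoS allows.
# NC_LISTEN is an assumption: "nc -l -p" for traditional netcat,
# "nc -l" for the OpenBSD variant.
NC_LISTEN=${NC_LISTEN:-"nc -l -p"}

start_senders() {
    # Ports 21, 25 and 80 all match the category 3/6 marking rules.
    for port in 21 25 80; do
        if [ "${APPLY:-0}" = 1 ]; then
            # $NC_LISTEN is unquoted on purpose so that it splits into words.
            $NC_LISTEN "$port" < /dev/zero > /dev/null &
        else
            printf '%s\n' "$NC_LISTEN $port < /dev/zero > /dev/null &"
        fi
    done
}

start_senders
```

On the client, open one connection per port, for instance `telnet <server> 80 > /dev/null`, then watch the rate of each connection in iptraf.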

To see the throughput of each connection, I use iptraf. It lets you directly observe the effect of starting or stopping a particular service.

The screenshot above shows QoS on class 1:13, which is guaranteed 600 kbit/s but can borrow up to 1 Mbit/s. Three TCP connections share the link, and iptraf shows that the rate of each connection varies between 325 and 340 kbit/s (1 Mbit/s divided by 3, about 333 kbit/s). Look at the TCP flow rate at the bottom right.

To assess the impact, you can redo this test with flows spread over different classes. I ran the test with an SSH session in addition to the 3 previous TCP connections, and it works pretty well: minimal latency (I was running a “cat” on syslog …), whereas without QoS PuTTY gets disconnected.

For comments / corrections / suggestions: julien (at) linuxwall.info